Most models have hyperparameters.

Hyperparameters are parameters that are not estimated from the data during training but are set beforehand by the user of the model.

Choosing the right hyperparameter values is a difficult task, all the more so when there are many hyperparameters, because each additional one multiplies the number of possible models.

A good understanding of the model, the input data and the output data helps to choose the hyperparameters wisely.

Another solution is to train the model with different combinations of hyperparameters, evaluate each of them on a validation set, and keep the combination that gives the best results. See Cross validation for more information on the train, validation and test sets.
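The selection loop described above can be sketched in a few lines. This is only an illustration under stated assumptions: the model is a toy one-dimensional ridge regression, the data is synthetic, and the names `fit_ridge_1d`, `mse` and the candidate values are hypothetical, not taken from any particular library.

```python
import random

def fit_ridge_1d(xs, ys, lam):
    """Closed-form 1-D ridge regression without intercept: w = sum(xy) / (sum(x^2) + lam)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def mse(w, xs, ys):
    """Mean squared error of the prediction w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Synthetic data (assumption): y = 2x + Gaussian noise.
rng = random.Random(0)
xs = [rng.uniform(-1, 1) for _ in range(60)]
ys = [2.0 * x + rng.gauss(0, 0.3) for x in xs]

# Simple hold-out split: the first 40 points train the model, the last 20 validate it.
x_train, y_train = xs[:40], ys[:40]
x_val, y_val = xs[40:], ys[40:]

# Candidate values for the single hyperparameter (the penalty strength).
candidates = [0.0, 0.1, 1.0, 10.0]

val_scores = {}
for lam in candidates:
    w = fit_ridge_1d(x_train, y_train, lam)   # calibrate on the training set
    val_scores[lam] = mse(w, x_val, y_val)    # score on the validation set

# Keep the hyperparameter value with the lowest validation error.
best_lam = min(val_scores, key=val_scores.get)
print(best_lam, val_scores[best_lam])
```

The weight `w` is calibrated from the data, while `lam` is only ever chosen by comparing validation scores, which is exactly the distinction between a parameter and a hyperparameter.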

The number of combinations to test depends on the time and computing power available. The combinations themselves can be chosen with two different methods: grid search and random search.

Grid search defines, for each hyperparameter, a set of values to try and then tests all possible combinations of these hyperparameter values.

For example for a Gradient Boosting algorithm, if I want to try:

Then grid search will test all the possible combinations of these hyperparameters (i.e. 72 combinations).
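As a rough sketch of how such a grid is enumerated, the snippet below builds every combination with `itertools.product`. The hyperparameter names and values are hypothetical, chosen only so that the grid also contains 72 combinations (4 × 3 × 3 × 2); they are not the values of the example above.

```python
from itertools import product

# Hypothetical grid for a gradient boosting model (illustrative values only).
param_grid = {
    "n_estimators": [100, 200, 500, 1000],   # 4 values
    "learning_rate": [0.01, 0.05, 0.1],      # 3 values
    "max_depth": [3, 5, 7],                  # 3 values
    "subsample": [0.8, 1.0],                 # 2 values
}

names = list(param_grid)

# Cartesian product of the value sets: one dict per combination to train and evaluate.
combinations = [dict(zip(names, values)) for values in product(*param_grid.values())]

print(len(combinations))  # 72 = 4 * 3 * 3 * 2
print(combinations[0])
```

The number of combinations is the product of the set sizes, so adding one more hyperparameter with three values would triple the number of models to train.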

Random search tests a given number of combinations, each drawn at random from probability distributions specified for the hyperparameters.

For example for a Gradient Boosting algorithm, if I want to try:

Where:

Then random search will test \(n\) (\(n\) being chosen by the user) randomly drawn combinations of these hyperparameters.
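A minimal sketch of the sampling step, using only Python's standard `random` module. The hyperparameter names, ranges and distributions are assumptions made for illustration (they are not the ones of the example above), and `sample_params` is a hypothetical helper.

```python
import random

rng = random.Random(42)  # fixed seed so the draws are reproducible

def sample_params(rng):
    """Draw one hyperparameter combination from illustrative distributions."""
    return {
        "n_estimators": rng.randint(100, 1000),     # uniform integer, both bounds included
        "learning_rate": 10 ** rng.uniform(-3, 0),  # log-uniform between 0.001 and 1
        "max_depth": rng.randint(2, 8),             # uniform integer, both bounds included
        "subsample": rng.uniform(0.5, 1.0),         # uniform float
    }

n = 20  # number of combinations to test, chosen by the user
trials = [sample_params(rng) for _ in range(n)]

for params in trials[:3]:
    print(params)
```

Unlike grid search, the cost is fixed by `n` and does not grow when a hyperparameter is added. A log-uniform draw is a common choice for quantities such as a learning rate that vary over several orders of magnitude.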

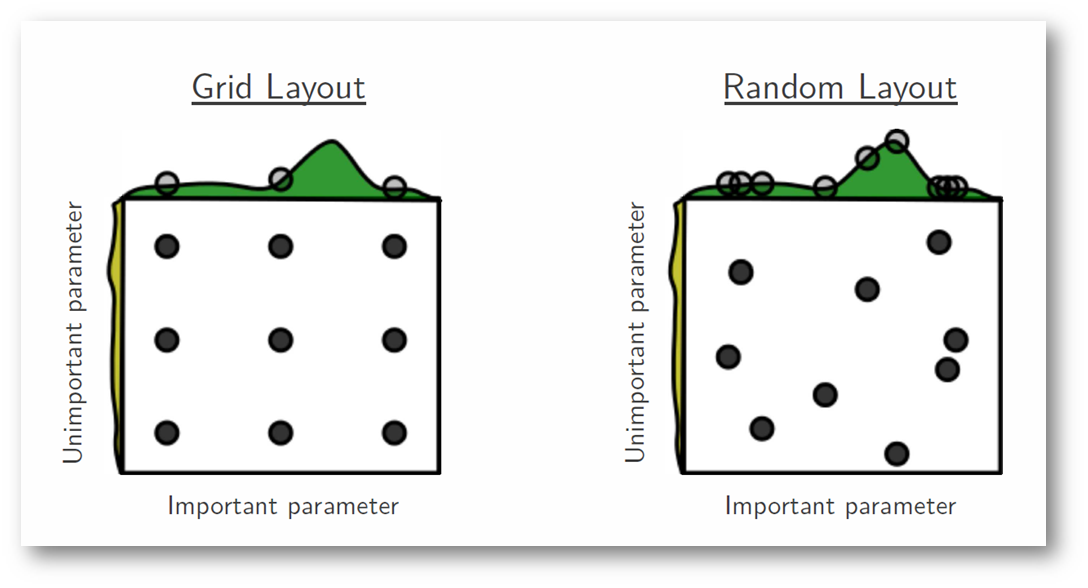

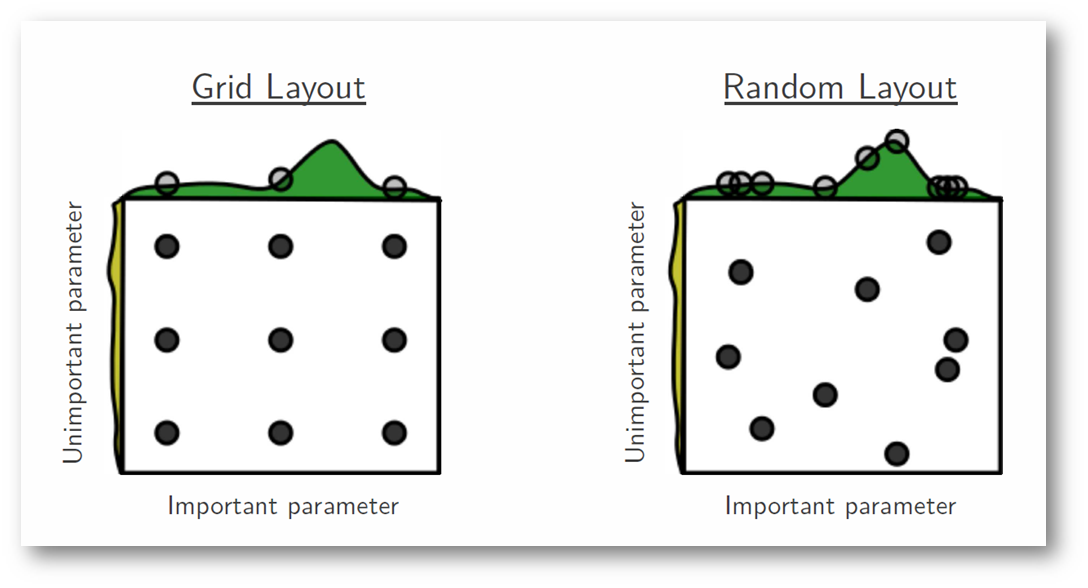

In general it is better to use random search: for the same number of trials, it tests more distinct values of each hyperparameter.

Here is a visual illustration of this:

Core illustration from Random Search for Hyper-Parameter Optimization by Bergstra and Bengio. It is very often the case that some of the hyperparameters matter much more than others (e.g. top hyperparam vs. left one in this figure). Performing random search rather than grid search allows you to much more precisely discover good values for the important ones. (text from CS231n course).
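The argument can also be checked numerically. The sketch below assumes two hyperparameters scaled to the range [0, 1] and a budget of nine trials, then counts how many distinct values of the first hyperparameter each method explores. The helper `distinct_first` is hypothetical.

```python
import random
from itertools import product

# Grid search: 3 values per hyperparameter, so 3 * 3 = 9 trials.
grid_axis = [0.0, 0.5, 1.0]
grid_trials = list(product(grid_axis, grid_axis))

# Random search with the same budget of 9 trials, drawn uniformly in [0, 1).
rng = random.Random(0)
random_trials = [(rng.random(), rng.random()) for _ in range(len(grid_trials))]

def distinct_first(trials):
    """Number of distinct values tried for the first hyperparameter."""
    return len({t[0] for t in trials})

print("grid:  ", distinct_first(grid_trials))
print("random:", distinct_first(random_trials))
```

With the grid, nine trials explore only three values of the first hyperparameter, whereas the random draws explore a different value on every trial. If that hyperparameter is the one that matters most, random search probes it three times more finely for the same cost.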

See: