The notation is different from the one we used (the one coming from the Udacity Deep Reinforcement Learning Nanodegree).

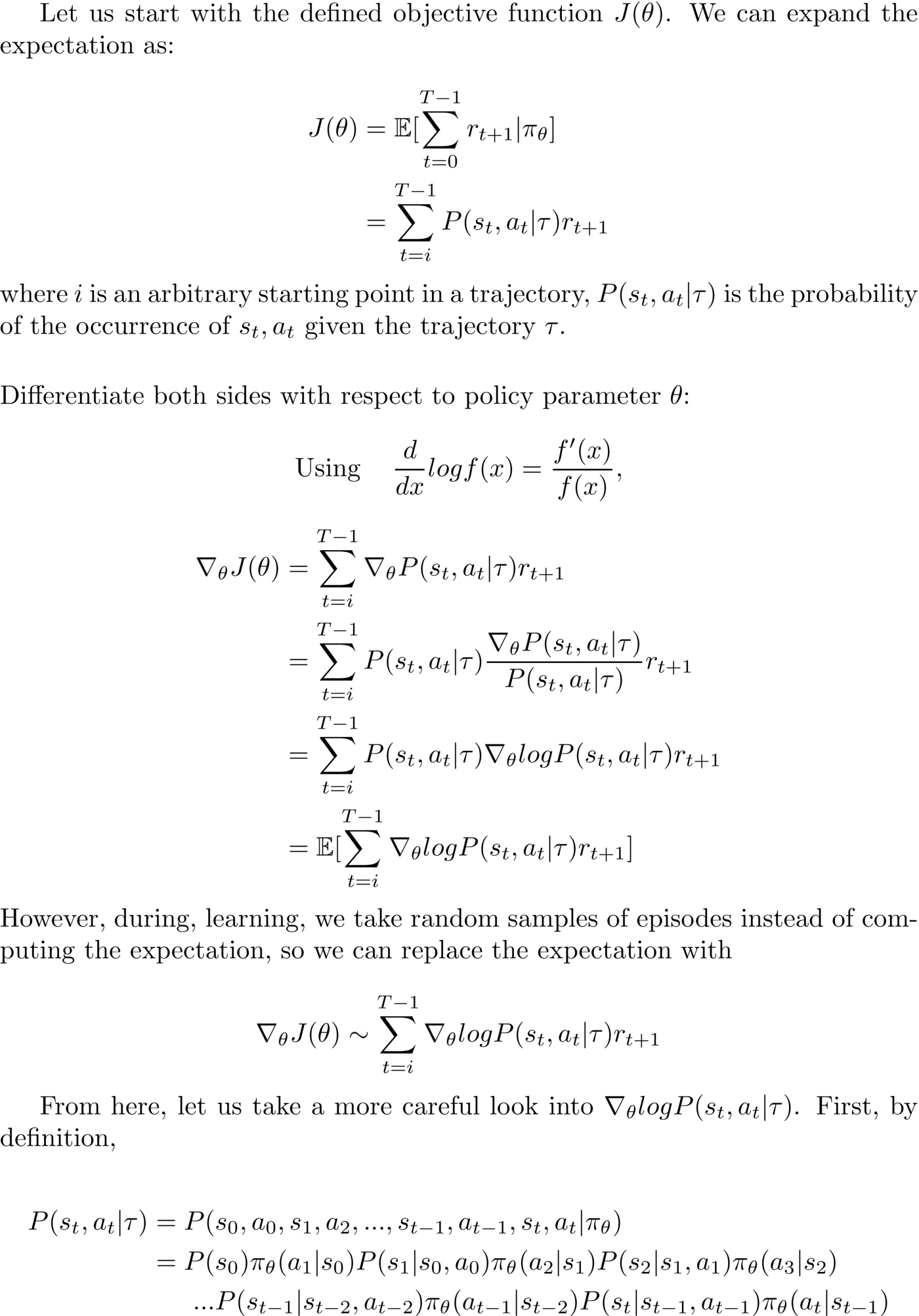

Here the probability of getting a trajectory from policy \(\pi_\theta\) that we noted \(P(\tau, \theta)=P(\tau \vert \theta)\) is expand as \(P(s_t, a_t \vert \tau)\).

This notation is a little bit misleading as \(\tau\) is a trajectory hence deterministic. It is implicitly \(P(s_t, a_t \vert \tau)P(\tau \vert \theta)\) which is \(P(\tau \vert \theta)\) for \((s_t, a_t) \in \tau\).

Also if we expand \(\tau\) using our notation we have:

\[P(\tau \vert \theta) = P(s_0, a_0, s_1, a_1, \ldots, a_H, s_H, s_{H+1} \vert \pi_\theta)\]And in the alternative notation we have:

\[P(s_t, a_t \vert \tau) = P(s_0, a_0, s_1, a_1, \ldots, s_t, a_t, \ldots, a_H, s_H, s_{H+1} \vert \pi_\theta)\]

See: