RNNs (Recurrent Neural Networks) and LSTMs (Long Short-Term Memory networks) are sequential neural networks, used mainly in NLP.

These models take sequential data as input and maintain a hidden layer \(h\) that is updated by each element of the input sequence in turn and that summarizes the information from the preceding elements.

The hidden layer \(h\) is in fact a sequence of hidden layers, one per element of the input: \(h = (h_1, \ldots, h_n)\).
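The update rule can be read as a loop (a left fold) over the sequence. The minimal Python sketch below assumes a hypothetical `step` function standing in for whatever update the model uses: the same `step` is applied at every position, and the successive states it produces form \(h = (h_1, \ldots, h_n)\).

```python
from typing import Callable, List, Sequence, TypeVar

H = TypeVar("H")  # type of the hidden state
X = TypeVar("X")  # type of one input element


def unroll(step: Callable[[H, X], H], h0: H, xs: Sequence[X]) -> List[H]:
    """Apply the same update `step` to each element of `xs` in order
    and return the hidden states (h_1, ..., h_n)."""
    hs = []
    h = h0
    for x in xs:
        h = step(h, x)  # h_t depends only on h_{t-1} and x_t
        hs.append(h)
    return hs


# Toy example: a "hidden state" that is the running sum of the inputs seen so far
print(unroll(lambda h, x: h + x, 0, [1, 2, 3, 4]))  # [1, 3, 6, 10]
```

In a real RNN, `step` is a parameterized function, and the fact that its parameters are shared across all steps is what the unfolded view of the architecture shows.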

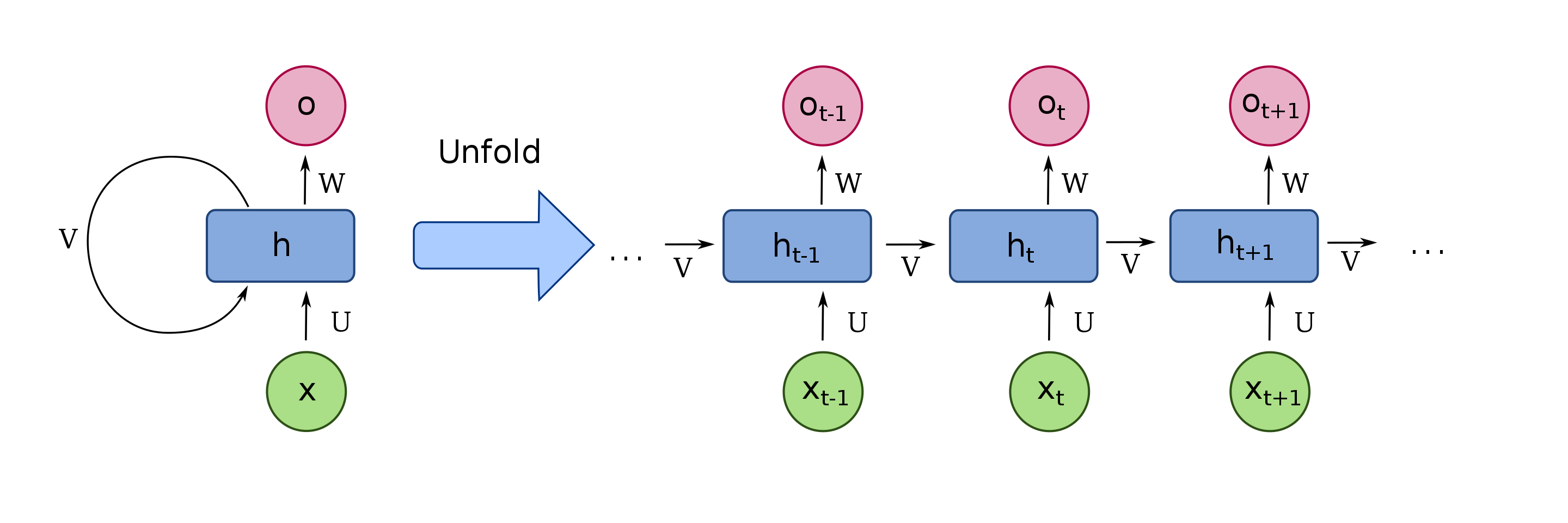

Here is the architecture of an RNN:

The left part of the diagram is the compact (rolled) view of the model, and the right part is the unfolded view, which shows every update along the sequence.

Each input \(x_t\) updates the hidden layer from \(h_{t-1}\) to \(h_t\) and generates an output \(o_t\) (used at every step in translation, for example). For tasks with a single output, such as sentiment analysis, only the last output \(o_n\) is used.

The formulas that link \(h_{t-1}\) and \(x_t\) to \(h_t\) and \(o_t\) are:

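\[\begin{aligned} h_t &= \sigma_h (U_h x_t + W_h h_{t-1} + b_h)\\ o_t &= \sigma_o (W_o h_t + b_o) \end{aligned}\]

Where:

- \(x_t\) is the input at step \(t\), \(h_t\) the hidden layer and \(o_t\) the output;
- \(U_h\), \(W_h\) and \(W_o\) are weight matrices and \(b_h\), \(b_o\) are bias vectors, all shared across time steps;
- \(\sigma_h\) and \(\sigma_o\) are activation functions (commonly \(\tanh\) for \(\sigma_h\), and for example a softmax for \(\sigma_o\) when the output is a class distribution).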
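The two equations above can be sketched in NumPy as a loop over the sequence. This is a minimal illustration, not a reference implementation: it assumes \(\sigma_h = \tanh\) and \(\sigma_o = \) softmax, and the function name `rnn_forward` and the sizes used in the usage lines are arbitrary choices for the example.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax over a 1-D vector."""
    e = np.exp(z - z.max())
    return e / e.sum()


def rnn_forward(xs, h0, U_h, W_h, b_h, W_o, b_o):
    """Run a vanilla RNN over a sequence.

    xs has shape (n, d_in): one input vector x_t per row.
    Returns the hidden states, shape (n, d_h), and the outputs, shape (n, d_out).
    Assumes sigma_h = tanh and sigma_o = softmax.
    """
    h = h0
    hs, outs = [], []
    for x_t in xs:
        h = np.tanh(U_h @ x_t + W_h @ h + b_h)  # h_t from x_t and h_{t-1}
        o_t = softmax(W_o @ h + b_o)            # o_t from h_t
        hs.append(h)
        outs.append(o_t)
    return np.stack(hs), np.stack(outs)


# Usage with random weights (sizes chosen arbitrarily for illustration)
rng = np.random.default_rng(0)
d_in, d_h, d_out, n = 3, 4, 2, 5
U_h = rng.normal(size=(d_h, d_in))
W_h = rng.normal(size=(d_h, d_h))
b_h = np.zeros(d_h)
W_o = rng.normal(size=(d_out, d_h))
b_o = np.zeros(d_out)
xs = rng.normal(size=(n, d_in))

hs, outs = rnn_forward(xs, np.zeros(d_h), U_h, W_h, b_h, W_o, b_o)
print(hs.shape, outs.shape)  # (5, 4) (5, 2)
last_output = outs[-1]  # many-to-one task (e.g. sentiment analysis): keep only o_n
```

For a task with one output per step, all rows of `outs` are used; for a single-output task, only the last row is.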

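Long Short-Term Memory (LSTM) is a special kind of RNN that handles long-term memory (information from many steps back) better than a plain RNN, in which the influence of early inputs tends to fade as the sequence gets longer.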

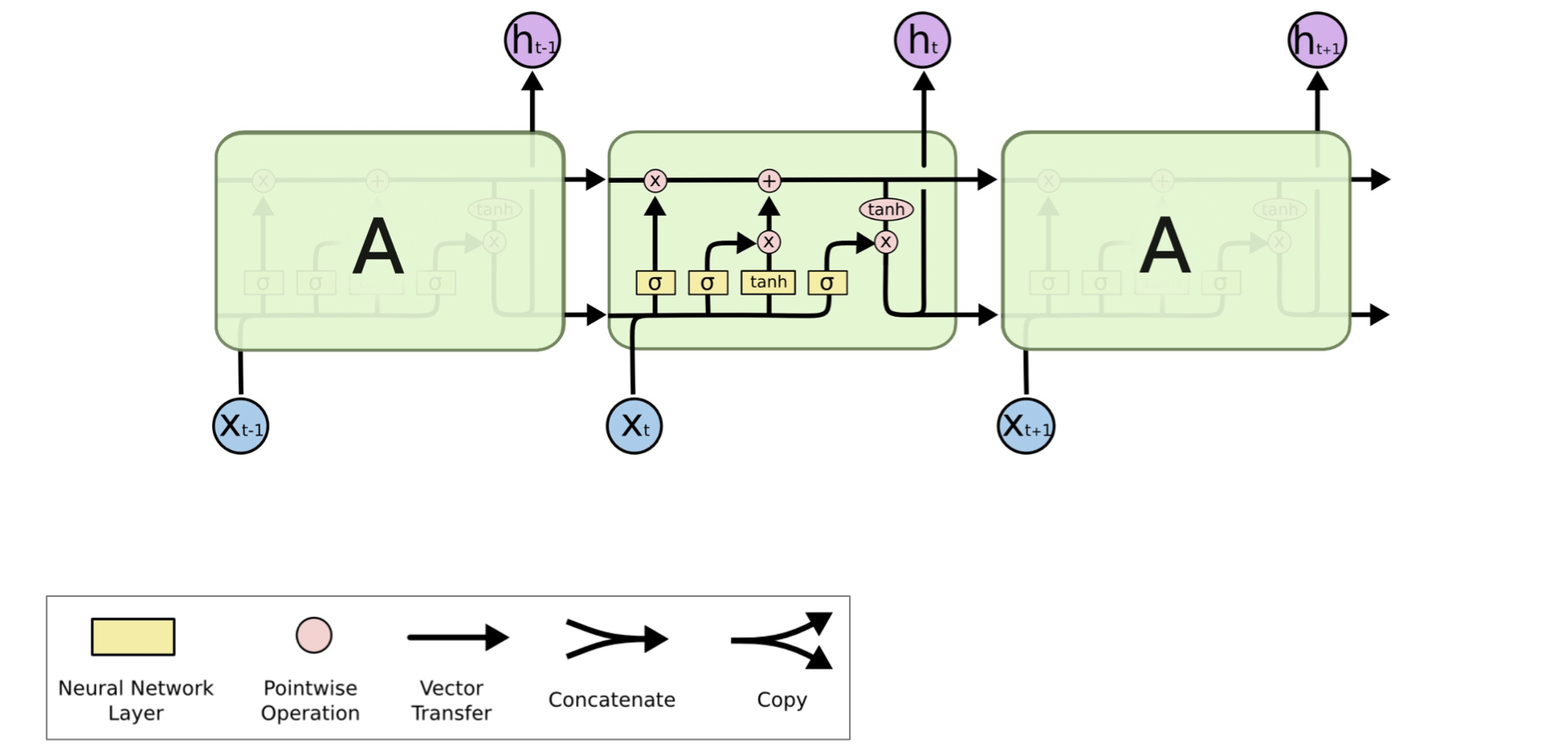

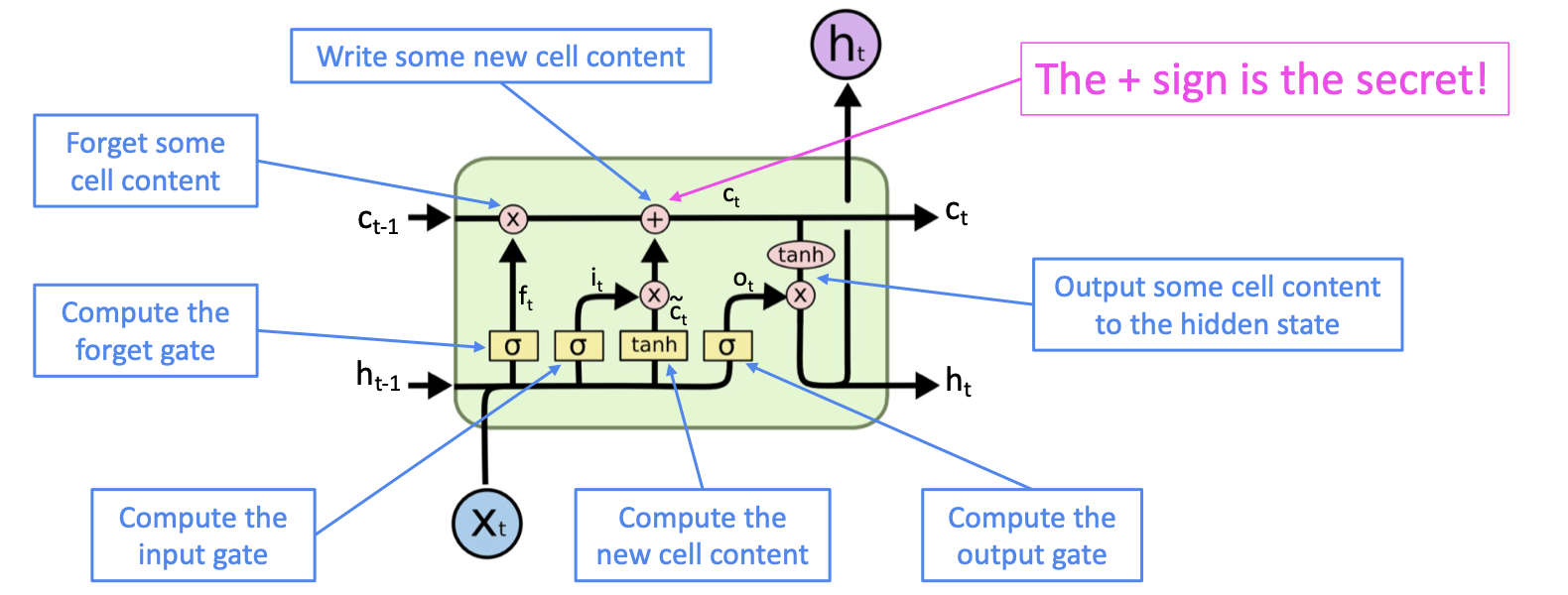

Here is the architecture of an LSTM:

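An LSTM contains gates (a forget gate, an input gate and an output gate) and a cell state that carries the long-term memory: the gates decide how much of that memory to keep or erase, and how much information from the latest input to use.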
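To make the role of the gates concrete, here is a minimal NumPy sketch of one LSTM step in the standard formulation without peephole connections. The parameter names (`U_g` for input weights, `W_g` for recurrent weights, `b_g` for biases) are an assumption chosen to mirror \(U_h\) and \(W_h\) in the RNN formula, and `o_t` here is the output gate, not an RNN output. The last lines show that a forget gate saturated at 1 together with an input gate at 0 leaves the cell state unchanged over many steps.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM time step (standard gates, no peepholes).

    p maps names to arrays: U_g (input weights), W_g (recurrent weights)
    and b_g (biases) for each gate g in {f, i, o} and the candidate c.
    """
    def pre(g):
        # Pre-activation shared by every gate: U_g x_t + W_g h_{t-1} + b_g
        return p[f"U_{g}"] @ x_t + p[f"W_{g}"] @ h_prev + p[f"b_{g}"]

    f_t = sigmoid(pre("f"))          # forget gate: how much of c_{t-1} to keep
    i_t = sigmoid(pre("i"))          # input gate: how much new information to write
    o_t = sigmoid(pre("o"))          # output gate: how much of the memory to expose
    c_hat = np.tanh(pre("c"))        # candidate memory built from the current input
    c_t = f_t * c_prev + i_t * c_hat  # cell state (long-term memory)
    h_t = o_t * np.tanh(c_t)          # hidden state
    return h_t, c_t


def lstm_forward(xs, h0, c0, p):
    """Run lstm_step over every row of xs; return all h_t and the last c_t."""
    h, c = h0, c0
    hs = []
    for x_t in xs:
        h, c = lstm_step(x_t, h, c, p)
        hs.append(h)
    return np.stack(hs), c


rng = np.random.default_rng(0)
d_in, d_h = 3, 4
p = {}
for g in "fioc":
    p[f"U_{g}"] = rng.normal(scale=0.5, size=(d_h, d_in))
    p[f"W_{g}"] = rng.normal(scale=0.5, size=(d_h, d_h))
    p[f"b_{g}"] = np.zeros(d_h)

hs, c_last = lstm_forward(rng.normal(size=(6, d_in)), np.zeros(d_h), np.zeros(d_h), p)
print(hs.shape)  # (6, 4)

# A forget gate saturated at 1 and an input gate at 0 keep the memory intact
keep = {k: np.zeros_like(v) for k, v in p.items()}
keep["b_f"] = np.full(d_h, 50.0)   # sigmoid(50) rounds to 1.0 in float64
keep["b_i"] = np.full(d_h, -50.0)  # sigmoid(-50) is about 2e-22
c0 = np.array([1.0, -2.0, 0.5, 3.0])
_, c_end = lstm_forward(rng.normal(size=(100, d_in)), np.zeros(d_h), c0, keep)
print(np.allclose(c_end, c0))  # True
```

In a trained network the gates take intermediate values that depend on the input, so the model learns when to keep, overwrite or expose its memory.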

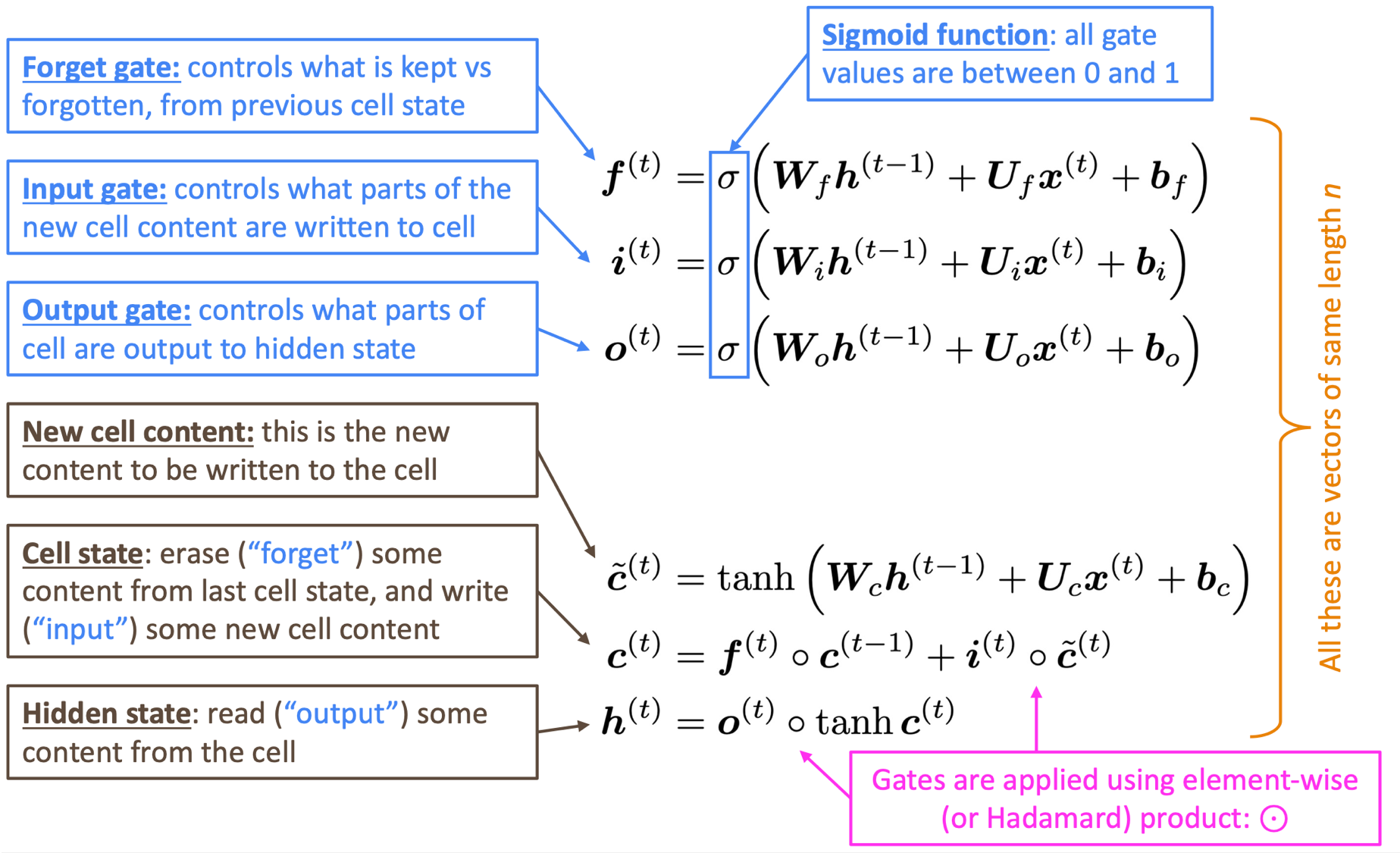

The formulas that link the different blocks of an LSTM are:
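For reference, the commonly used LSTM formulation (without peephole connections) can be written as follows. The notation is an assumption chosen to mirror the RNN formula (\(U\) for input weights, \(W\) for recurrent weights, \(b\) for biases) and may differ from the one used in the figure; \(\sigma\) is the logistic sigmoid, \(\odot\) is element-wise multiplication, \(c_t\) is the cell state, and \(o_t\), \(W_o\) here belong to the output gate rather than to the RNN output.

\[\begin{aligned}
f_t &= \sigma(U_f x_t + W_f h_{t-1} + b_f)\\
i_t &= \sigma(U_i x_t + W_i h_{t-1} + b_i)\\
o_t &= \sigma(U_o x_t + W_o h_{t-1} + b_o)\\
\tilde{c}_t &= \tanh(U_c x_t + W_c h_{t-1} + b_c)\\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t\\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}\]

The forget gate \(f_t\) scales the old memory \(c_{t-1}\), the input gate \(i_t\) scales the new candidate \(\tilde{c}_t\), and the output gate \(o_t\) decides how much of the memory reaches the hidden layer \(h_t\).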

And here are some visual explanations:
