LightGBM is another implementation of gradient boosting that adds several optimizations: histogram-based split finding, GOSS, EFB and leaf-wise tree growth.

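LightGBM uses a histogram-based algorithm to handle continuous values instead of the classical pre-sort-based algorithm.

It is faster than the pre-sort-based algorithm because, once the values are bucketed into bins, split candidates are evaluated per bin rather than per sorted data point.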
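As a rough illustration of the histogram idea, the sketch below buckets one continuous feature and accumulates the gradient per bin. The equal-width binning and the `build_histogram` helper are assumptions made for readability, not LightGBM's actual binning strategy.

```python
from bisect import bisect_right

def build_histogram(values, gradients, n_bins=4):
    """Bucket a continuous feature into equal-width bins and sum gradients per bin."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins
    # Upper edges of every bin except the last one.
    edges = [lo + width * i for i in range(1, n_bins)]
    grad_sum = [0.0] * n_bins
    count = [0] * n_bins
    for v, g in zip(values, gradients):
        b = bisect_right(edges, v)  # bin index in [0, n_bins - 1]
        grad_sum[b] += g
        count[b] += 1
    return edges, grad_sum, count

values = [0.1, 0.4, 0.35, 0.8, 0.95, 0.6]
gradients = [-1.0, -0.5, -0.7, 0.9, 1.2, 0.3]
edges, grad_sum, count = build_histogram(values, gradients, n_bins=4)
```

Only the `n_bins - 1` bin edges are then candidate thresholds, so the split search scans a handful of bins instead of every sorted value.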

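GOSS (Gradient-based One-Side Sampling) is a method to subsample the training instances (the rows, not the features) used to evaluate splits. It keeps all the instances with a large gradient, randomly samples a fraction of the instances with a small gradient, and up-weights the sampled ones by a constant factor so that the estimated split gain stays close to the one computed on the full data.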
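A minimal sketch of this sampling step, assuming a hypothetical `goss_sample` helper where `top_rate` is the fraction of large-gradient instances kept and `other_rate` is the fraction of the full data drawn from the remaining ones:

```python
import random

def goss_sample(gradients, top_rate=0.2, other_rate=0.2, seed=0):
    """Return (indices, weights) selected by a GOSS-style sampling."""
    n = len(gradients)
    n_top = int(top_rate * n)
    n_other = int(other_rate * n)
    # Rank instances by absolute gradient, largest first.
    order = sorted(range(n), key=lambda i: abs(gradients[i]), reverse=True)
    top, rest = order[:n_top], order[n_top:]
    # Randomly keep a few of the small-gradient instances.
    rng = random.Random(seed)
    sampled = rng.sample(rest, min(n_other, len(rest)))
    # Up-weight them to compensate for the ones that were dropped.
    amplify = (1.0 - top_rate) / other_rate
    weights = {i: 1.0 for i in top}
    weights.update({i: amplify for i in sampled})
    return top + sampled, weights

gradients = [0.1, -2.0, 0.05, 1.5, -0.2, 0.3, -0.01, 0.4, 0.02, -0.6]
indices, weights = goss_sample(gradients)
```

With these toy values, the two largest-gradient rows (indices 1 and 3) are always kept with weight 1, and two of the other rows are kept with weight `(1 - 0.2) / 0.2`.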

See the clear explanation on:

EFB (Exclusive Feature Bundling) is a method to merge sparse features (two in the simplest case) into one. It looks for non-overlapping features (features that are never non-zero on the same sample) and merges them, adding the maximum value of feature 1 to the values of feature 2 so that the two original features end up in different buckets.
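The merge can be sketched on two toy features as follows. The helpers `are_exclusive` and `bundle` are hypothetical, and the sketch assumes non-negative feature values and strict exclusivity.

```python
def are_exclusive(f1, f2):
    """True if the two features are never non-zero on the same sample."""
    return all(a == 0 or b == 0 for a, b in zip(f1, f2))

def bundle(f1, f2):
    """Merge two exclusive features; non-zero f2 values are shifted by max(f1)."""
    offset = max(f1)
    merged = []
    for a, b in zip(f1, f2):
        if a != 0:
            merged.append(a)           # value comes from feature 1
        elif b != 0:
            merged.append(b + offset)  # value comes from feature 2, shifted
        else:
            merged.append(0)           # both features are zero
    return merged

f1 = [0, 3, 0, 1, 0]
f2 = [2, 0, 0, 0, 5]
exclusive = are_exclusive(f1, f2)
merged = bundle(f1, f2)
```

Here `merged` is `[5, 3, 0, 1, 8]`: values up to 3 can only come from feature 1 and values above 3 can only come from feature 2, so a tree can still split on either original feature.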

See the clear explanation on:

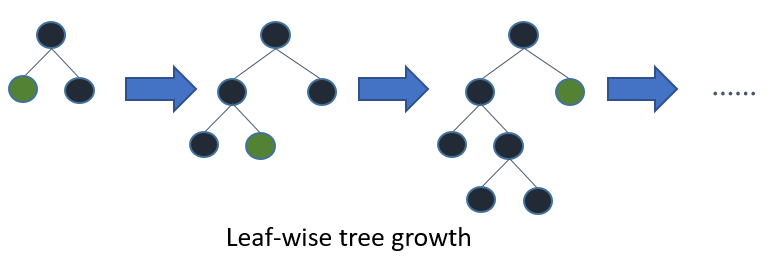

LightGBM uses leaf-wise tree growth.

This means that at each step the algorithm does not split every leaf of the current level; it picks the single best split among all the current leaves. Hence a tree can be very unbalanced.

Leaf-wise algorithms tend to achieve lower loss than level-wise algorithms, but they are prone to overfitting. Setting a max depth is hence very important.
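As an example of constraining leaf-wise growth, here is a parameter dictionary of the kind that would be passed to `lightgbm.train`. The parameter names are LightGBM's; the values are hypothetical starting points, not recommendations.

```python
# Hypothetical starting values, to be tuned on validation data.
params = {
    "objective": "binary",
    "max_depth": 6,          # hard cap on tree depth
    "num_leaves": 31,        # main complexity control for leaf-wise growth
    "min_data_in_leaf": 20,  # avoids leaves fitted on a handful of samples
}

# A binary tree of depth d has at most 2**d leaves, so a larger
# num_leaves could never be reached under this max_depth.
max_reachable_leaves = 2 ** params["max_depth"]
```

Keeping `num_leaves` well below `2 ** max_depth` is one common way to limit how unbalanced and deep the trees can become.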

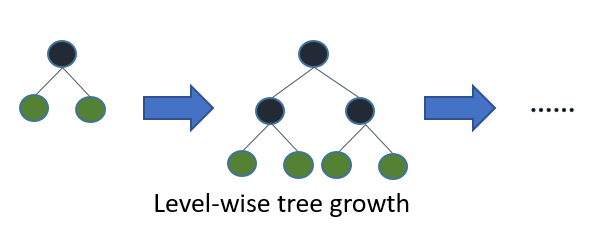

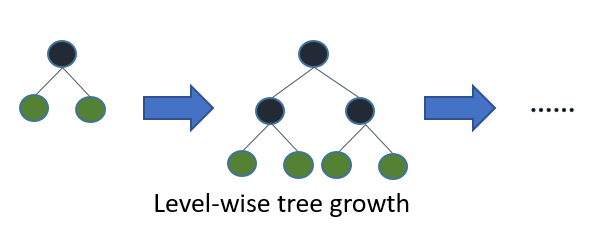

Level-wise:

Leaf-wise:

To split unordered categorical features, LightGBM takes advantage of the gradient boosting structure: it sorts the categories not by their output value (as in a classic decision tree) but by the sum of the gradients divided by the sum of the hessians within each category, and then looks for the best split along that ordering.
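The ordering step can be sketched like this, assuming a hypothetical `sort_categories` helper and strictly positive hessians. It leaves out any smoothing or regularization the library may apply.

```python
from collections import defaultdict

def sort_categories(categories, gradients, hessians):
    """Order categories by sum(gradient) / sum(hessian)."""
    g_sum, h_sum = defaultdict(float), defaultdict(float)
    for c, g, h in zip(categories, gradients, hessians):
        g_sum[c] += g
        h_sum[c] += h
    return sorted(g_sum, key=lambda c: g_sum[c] / h_sum[c])

categories = ["a", "b", "a", "c", "b", "c"]
gradients = [0.5, -1.0, 0.7, 0.1, -0.8, 0.3]
hessians = [1.0] * 6
ordered = sort_categories(categories, gradients, hessians)

# Candidate splits are the prefixes of the ordering versus the rest.
candidate_splits = [(ordered[:k], ordered[k:]) for k in range(1, len(ordered))]
```

With these toy values the ordering is `['b', 'c', 'a']`, so only two partitions need to be evaluated instead of every possible subset of categories.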

See:

See: